☁ "Nobody wants a Hiroshima moment"

Checking in on Responsible AI, AI Governance, and LLM Security

Move fast… and request regulations?

All new technologies tend to follow a similar path: build as fast as possible and ask questions later (i.e., pick up the pieces). AI seems to be moving through this hype cycle even faster than prior technologies. That is driven by the immense rush of funding and the technology's potential for significant impact on the economy and society.

Stakeholders across government and private industry are already working to make sure the technology is used properly and protected from outside threats.

A few thoughts on:

What is the White House doing?

AI Attack Surface Map

The 10 ways to attack LLMs

Vulnerabilities by LLM touch points

Marc Benioff remains one of AI’s most ardent supporters. He is also calling for guidelines before we face a “Hiroshima” moment that reveals how dangerous the technology might be:

Technologies are never good or bad, it’s what we do with them that matters. Nobody wants a Hiroshima moment to understand how dangerous AI is. We want to be able to kind of get our heads around the tremendous consequences of the technology that we are working with. And that’s going to require a multi-stakeholder approach — companies, governments, non-governmental organizations and others to put together the guidelines for this technology. - Marc Benioff, CEO Salesforce (Associated Press)

☁ SaaS Bootcamp: Looking to dive into cybersecurity?

Simplify cybersecurity & learn to model SaaS companies

Join students from Goldman Sachs, Microsoft, Bank of America, Palo Alto Networks, Cowen, SVB, Scotiabank, VCs, and ETF managers

🚨 Prices increase ANOTHER $100 on Sunday (8/20)! (Use code: SaaS100)

Rushing AI Products carries risks… especially within security

Nick Schneider, CEO of Arctic Wolf, is concerned about rushing new AI products to market. He is especially concerned about cybersecurity, where companies (rightfully) have a low risk tolerance for new technologies:

People are rushing to get things into market and a lot of times what’s being delivered is beta or really early versions of AI within their tool sets. In certain industries, that’s fine because there’s very low risk to there being an issue or an error. But in cybersecurity where time, accuracy and efficacy of what’s being delivered to the end user is paramount, especially in the situation where they might be having an issue, you just want to make sure that what’s being put into the market has been vetted properly and has been given enough time to soak within the data. It’s a cautiously optimistic view. - Nick Schneider, CEO Arctic Wolf (CRN)

The White House Response

Lining up early support for new regulation

Tech is well known for “moving fast and breaking things.” AI is powerful enough to disrupt massive parts of society, so governments have been stepping in proactively to head off significant downsides.

Governments (especially the White House) are concerned on multiple fronts, including labor (potential layoffs and dislocations) and human rights (inherent biases, etc.). The Biden-Harris administration secured voluntary commitments from top tech companies to ensure AI products are developed safely and securely.

Leaders from seven top tech and AI firms attended the meeting and signed on to the commitments:

Microsoft president Brad Smith

OpenAI president Greg Brockman

Google president of global affairs Kent Walker

Meta president of global affairs Nick Clegg

Anthropic CEO Dario Amodei

Amazon Web Services CEO Adam Selipsky

Inflection AI CEO Mustafa Suleyman

Why is this necessary? Because you should never let anyone (especially large tech companies) grade their own homework. Derek du Preez summed up the state of AI development, the potential risks, and the coming battle with regulators:

If recent history tells us anything it’s that technology companies can’t necessarily be trusted to mark their own homework, when it comes to responsible development. This is coupled with the fact that fines and penalties from governments are sometimes seen simply as a ‘cost of doing business’ in the pursuit of greater market share. There are going to be serious mistakes made along the way and the real test will be how governments respond to those and correct industry along the path towards safe and fair AI use. Responsible development and use of AI is critical to all of us, but the pace of development will inevitably outpace the creation of effective legislation. Lawyers and the courts will be busy over the next decade, no doubt, but it’s also worth remembering that AI use cases are often already covered by established regulations - including human rights and labor laws. My hope is that these companies recognize that if they want to be leaders in AI, building in trust for users from the start is essential. - Derek du Preez (Diginomica)

3 Frameworks for AI Risk

Categorizing High Risk Scenarios

Sandesh Mysore Anand wrote a great piece in Boring AppSec on categorizing “high-risk” scenarios and building a framework to mitigate them.

Sandesh notes that risks differ depending on whether an LLM is private or public and on how AI is actually being used (individual prompts vs. API integrations).

I highly recommend reading his full piece. The resources below were found via his “References” section:

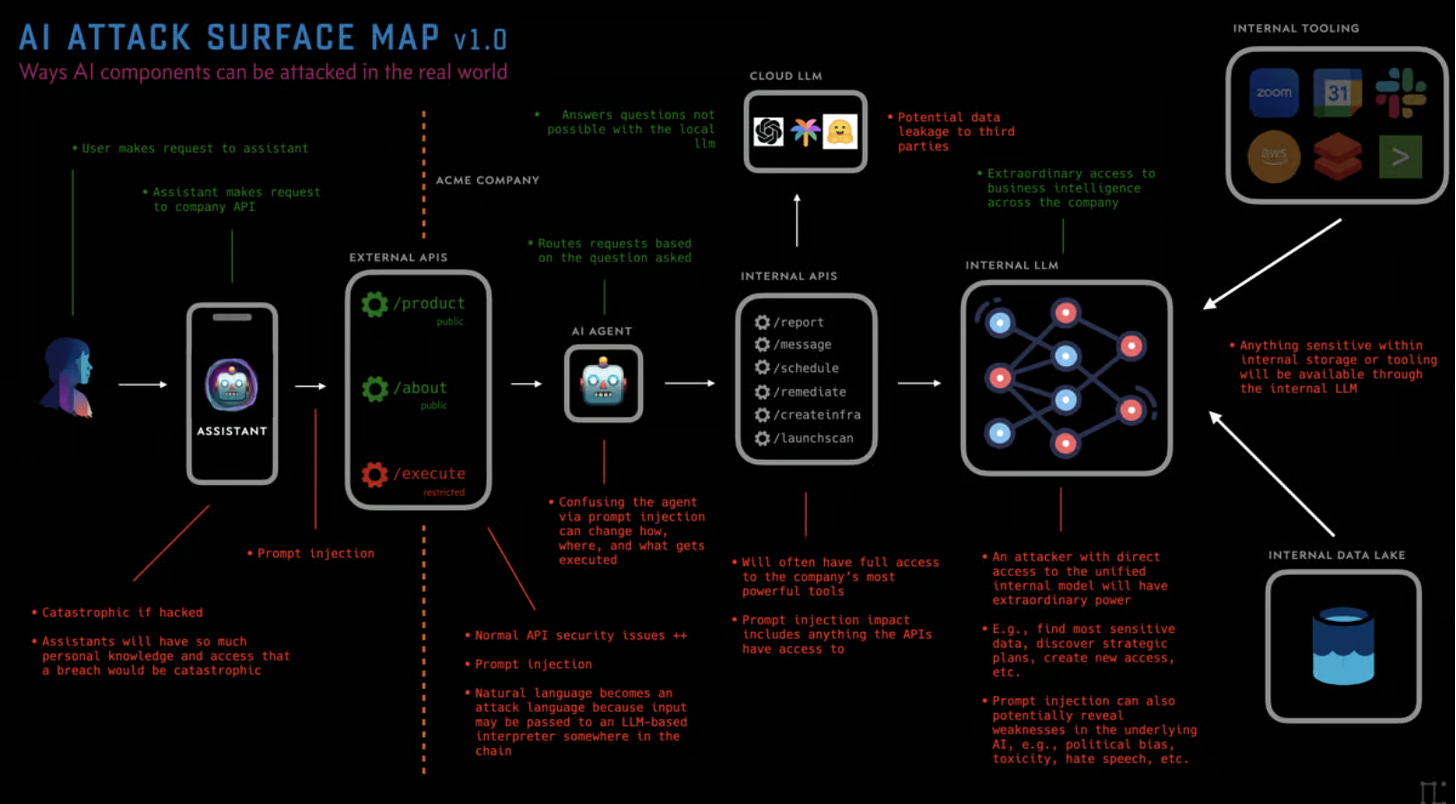

AI Attack Surface Map

Daniel Miessler created an AI Attack Surface Map on his blog Unsupervised Learning. He stresses that the risks AI poses stretch well beyond the model itself and reach much closer to everyday life via AI assistants.

He concludes:

AI is coming fast, and we need to know how to assess AI-based systems as they start integrating into society

There are many components to AI beyond just the LLMs and Models

It’s important that we think of the entire AI-powered ecosystem for a given system, and not just the LLM when we consider how to attack and defend such a system

We especially need to think about where AI systems intersect with our standard business systems, such as at the Agent and Tool layers, as those are the systems that can take actions in the real world - Daniel Miessler

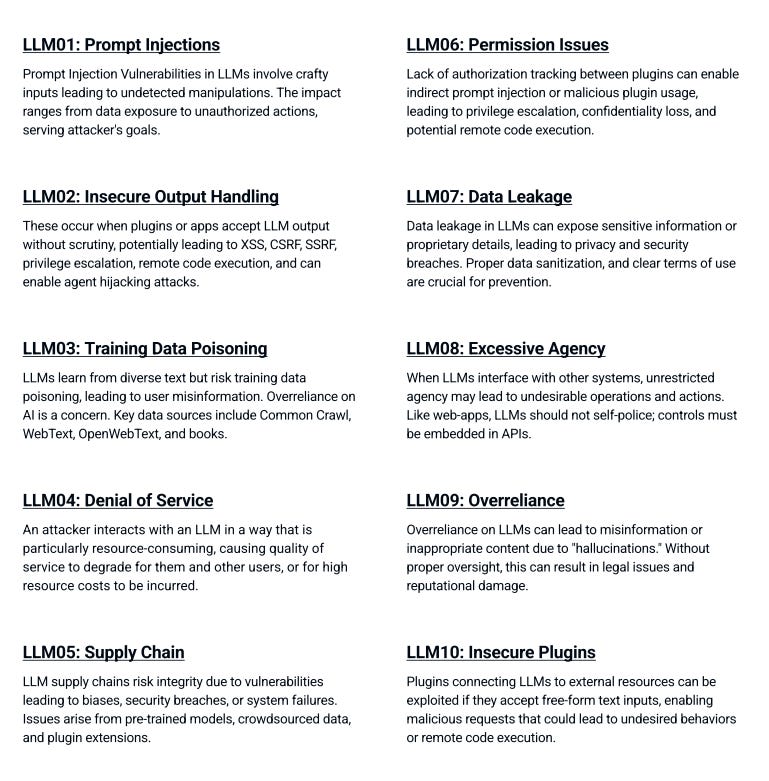

The 10 ways to attack LLMs

Finally, the Open Worldwide Application Security Project (OWASP), a nonprofit foundation that works to improve the security of software, published its Top 10 for Large Language Model Applications. The report covers the 10 most critical ways to attack LLMs.

With Blessings of Strong NRR,

Thomas

📢☁ Cybersecurity x SaaS Bootcamp

Simplify the complex world of cybersecurity

Learn to analyze and model SaaS companies

Live Q&A + interactive models/worksheets

Slack networking group

Amazing guest speakers (last cohort, we had the CISO of Datadog)

Hosted with FinTwit legend Francis Odum, a cybersecurity guru known for several standout pieces. These include Palo Alto’s Path to $100bn (quoted by Nikesh Arora, CEO of Palo Alto Networks), a SASE technology deep dive, and Microsoft Security reaching $20bn.
